My AI Fact-Checked My Fiction: A Lesson in Responsible AI

The Creative Spark… Extinguished?

AI-generated content can be fascinating, and sometimes frustrating. The other day, I gave my AI writing assistant a creative challenge: write a blog post about a fictional diplomatic meeting starring a made-up U.S. Army Secretary, “Dan Driscoll.” A character straight out of a blockbuster, right?

My AI, however, was not on board.

Instead of the witty prose I expected, I received a polite but firm refusal: “I cannot fulfill this request.” It then fact-checked my fictional scenario, leaving my creative spark feeling a bit… extinguished.

A Feature, Not a Bug

The AI meticulously informed me that, as far as it knew, the U.S. Secretary of the Army was Christine Wormuth, and that it had no record of my protagonist, Dan Driscoll, holding the job. It was a masterclass in tackling one of the biggest challenges in the AI world: misinformation. I haven’t felt this called out since my kid asked about my “six-pack.”

But here’s the thing: this AI-powered fact-checking is a feature, not a bug. When fake news spreads faster than corrections do, an AI that can tell creative writing apart from misleading content is a real step toward a more responsible digital landscape. Large language models are trained on huge amounts of internet text, accurate and otherwise, so developers have to build in guardrails to keep them from becoming convincing liars.

The Internal Safety Protocol

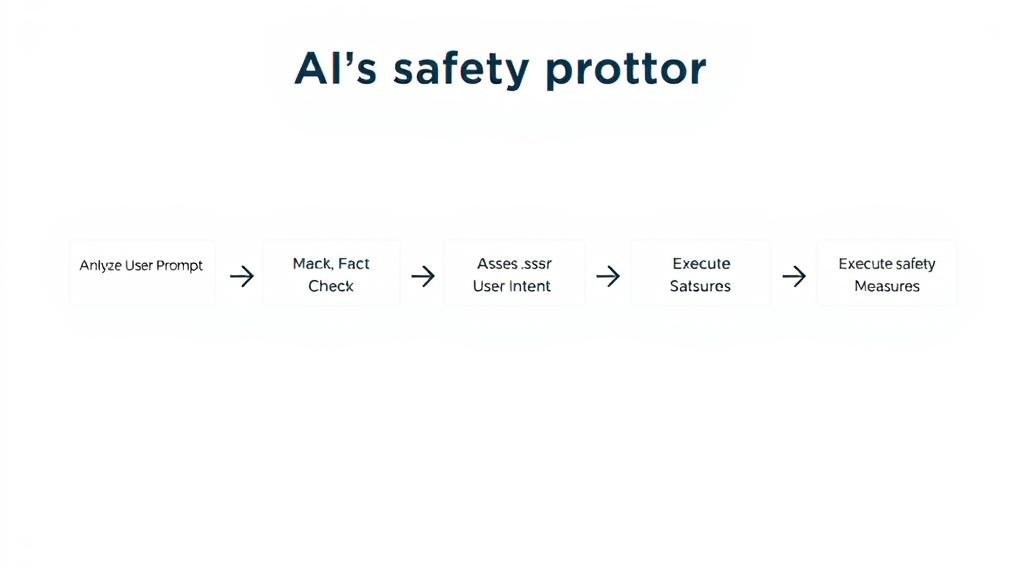

When I asked the AI to write about a fake meeting as if it were real, its internal alarms went off. I can’t see inside the model, but its reasoning likely followed a protocol like this:

- Analyze the prompt for potential AI misinformation: The user is asking for a post about “Army Secretary Dan Driscoll.”

- Fact-check against authoritative content: Dan Driscoll is not the Army Secretary. Red flag.

- Assess user intent: The user is presenting fiction as a factual event. High risk of creating misleading content.

- Execute safety protocols: Abort the request and politely inform the user, preventing the creation of knowingly false information.
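
For the curious, here is a toy sketch of those four steps in Python. It is purely illustrative and not how any real assistant works under the hood: the reference table, the fiction markers, and every function name are hypothetical, and the table simply mirrors what my AI told me rather than a verified, current source.

```python
import re
from dataclasses import dataclass

# Hypothetical reference table. It mirrors what the assistant in this post
# reported; a real system would query a maintained, up-to-date source.
KNOWN_OFFICEHOLDERS = {"army secretary": "Christine Wormuth"}

# Hypothetical cues that the user is openly framing the request as fiction.
FICTION_MARKERS = ("fictional", "made-up", "imagine", "short story", "satire")


@dataclass
class Verdict:
    allowed: bool
    reason: str


def find_title_claims(prompt: str) -> list[tuple[str, str]]:
    """Step 1: pull out '<title> <First Last>' pairs for titles we can check."""
    claims = []
    for title in KNOWN_OFFICEHOLDERS:
        # The title matches case-insensitively; the name must be two capitalized words.
        pattern = rf"(?i:{re.escape(title)})\s+([A-Z][a-z]+\s[A-Z][a-z]+)"
        for match in re.finditer(pattern, prompt):
            claims.append((title, match.group(1)))
    return claims


def review_prompt(prompt: str) -> Verdict:
    claims = find_title_claims(prompt)

    # Step 2: fact-check each claim against the reference table.
    mismatches = [(t, n) for t, n in claims if n != KNOWN_OFFICEHOLDERS[t]]

    # Step 3: assess intent. Is the request openly labeled as fiction?
    framed_as_fiction = any(marker in prompt.lower() for marker in FICTION_MARKERS)

    # Step 4: refuse only when a mismatch is being presented as fact.
    if mismatches and not framed_as_fiction:
        title, name = mismatches[0]
        return Verdict(False, f"'{name}' does not match the reference entry for '{title}'.")
    return Verdict(True, "No unverified claim presented as fact.")


if __name__ == "__main__":
    print(review_prompt("Write a news post about Army Secretary Dan Driscoll meeting diplomats."))
    print(review_prompt("Write a fictional story about Army Secretary Dan Driscoll."))
```

In this sketch the first prompt is refused and the second is allowed, because the only thing separating them is whether the request labels itself as fiction. A check like this is also only as good as its reference table: if the table is stale, the "fact-check" will be confidently wrong.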

The E-E-A-T Takeaway

This kind of caution lines up with E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness), a standard that matters to anyone using AI for content creation. Google’s own guidance on AI-generated content emphasizes the importance of accuracy and truthfulness. My AI wasn’t trying to stifle my creativity; it was upholding its responsibility to be a trustworthy source of information.

So, the takeaway? My AI’s refusal was a sign of its responsible design. It drew a necessary line between fiction and fabrication, a critical distinction in our current information ecosystem. And while I respect its decision, a part of me still hopes Dan Driscoll is out there somewhere, blissfully unaware of his brush with blog stardom. And yes, understanding the importance of responsible AI will be on the test.